Anyone paying attention to the current state of AI is aware of the exploding need for compute resources. Almost inevitably, it seems like this demand has turned heads back towards the world of serverless and non-traditional compute options. While the pain points of developing and deploying ML models have been around for some time, it seems like the exorbitant compute requirements demanded by generative AI have finally pushed traditional methods to their breaking point.

A story of pain

Before really diving into the state of these platforms today, it’s important to understand the agony involved with the traditional process of hosting your own AI infrastructure. Speaking from personal experience, it may go something like this:

- You’ve got a new AI project. How exciting!

- But your laptop is small and pathetic. There’s no chance it will support you through this. You need horsepower. GPUs. You know what that means.

- There are probably cheaper, newer providers out there. You do not explore them. You probably have Stockholm syndrome. You return to your abuser. Hello https://us-east-2.signin.aws.amazon.com/

- You go to deploy a new instance in ec2, but you’ve run up against your service limits again. You request an increase. Maybe you even feel self important enough to call and beg some poor AWS customer support rep to make it quick.

- It takes at least a day because you are not actually important. But it’s finally ready.

- But wait! There’s more!

- Maybe if you were actually competent, you could figure out how to further optimize the resources you had. Unfortunately you are not. Instead you cry, make whoever has the unfortunate pleasure of being in your near vicinity concerned about your mental state, and finally, you convince yourself you need more compute.

- Repeat steps 4-7 several times.

- In the meantime, you didn’t forget that you will need to actually deploy the model right? You know, containerizing it, creating and exposing an api, CI/CD? Did we mention autoscaling? Monitoring? … Do you know how to do all that properly? Like really know how to do that?

- Several months pass. You are now running a p658d5billion xxxxlarge instance that charges you infinity dollars per day and has a cold start time of 5 days.

- You bankrupt your company with your AWS bill.

- You are now unemployed.

- You realize you are an ML engineer in the middle of an AI boom and go groveling to some VC firms for a job.

- Now you are an investor at Scale Venture Partners

-FIN-

Disclaimer: despite certain ~embellishments~, my old company is alive and well. I only joined VC to pursue my life-long dream of catalyzing entrepreneurial spirit 😉

New solutions in Serverless AI

But alas, if only I had known what I would miss out on! Many of today’s engineers have now discovered the wonderful land of not managing your own infrastructure. There is a growing number of companies that now enable you to run your models or other compute-intensive workloads on their managed cloud by offering “serverless” GPUs and other performant compute options.

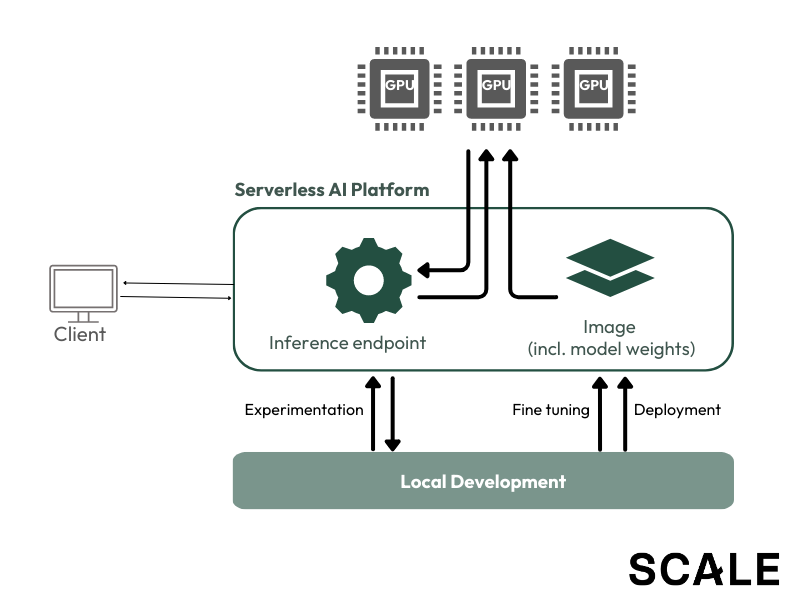

What exactly is meant by serverless in this context? Traditionally, a service (i.e. your model’s inference) would be deployed on its own dedicated servers and run 24/7 listening for requests on a certain port. In the world of serverless, however, you only pay for the time the servers run to service the requests, and you forgo much of the responsibility involved with managing the infrastructure yourself. While the actual implementation varies from provider to provider, this usually means that upon a request, the provider provisions the necessary machines and deploys the application. The application is run long enough to service the request, or requests, after which the machines eventually spin back down. This concept is what powers very popular services like AWS’s Lambda Functions.

Now, a new category of providers is bringing the concept of serverless to GPUs and thus, the world of machine learning. For many developers, this is a dream come true. With these new platforms, you can:

- Run and experiment with models and/or pipelines without leaving your local development environment

- Get an inference endpoint for your model in a few clicks

- Pay as you go (i.e. only per request/for the uptime of the server)

To be clear, that means you now do NOT have to deal with:

- Getting access to GPUs

- Building and deploying the model as a working service, and thus…

- Kubernetes

- API servers

- Permissioning

- Autoscaling

- CI/CD

- etc.

- Paying compute costs for an idle machine when there are no requests to service, which in the world of GPUs, add up quickly.

The players

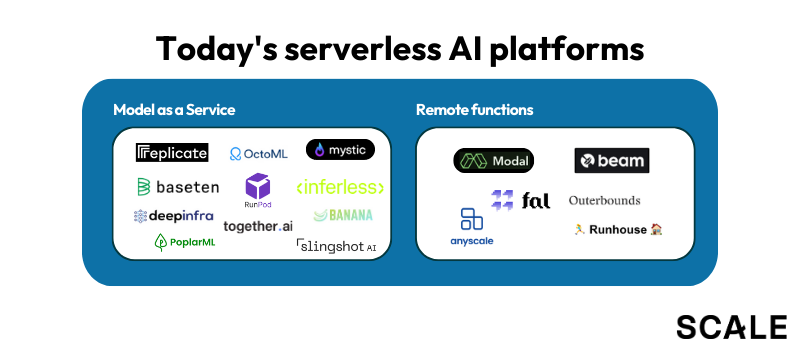

Where would one go to experience such magic? With the space rapidly accelerating, developers now find themselves with a number of options. Broadly speaking, the players fall into two rough categories:

Category 1: Model-as-a-Service

What: These platforms allow users to upload a custom model or use one off the shelf and provide an HTTP endpoint for inference with serverless compute. Compute resources will spin up on request and shut down on timeout.

How:

- Choose your hardware (E.g. H100, A100, A10G, etc)

- Option A: Use a standard model already defined on the platform. Some providers also allow fine tuning to customize these.

- Option B: Bring your own model (e.g., use any HuggingFace model)

- Shuffle your code into the various “standard” blocks that the provider requires (functions to, e.g., load model, call on inference requests)

- Write an image spec of dependencies, env vars, instructions for accessing the weights, etc. (e.g., YAML)

- Build and push image (alternative push code to provider’s build farm)

- Use the API inference endpoint provided

Who: Replicate, OctoML, Baseten, Banana, RunPod, Inferless, PoplarML, DeepInfra, Mystic, Together, Slingshot

Category 2: Remote functions

What: These platforms offer a serverless remote environment to run and deploy the functions that the local resources either cannot execute (e.g., no GPUs, too much parallelization) or will be painfully slow to execute. In most cases, these offeringings are built to cater to projects beyond just AI and enable developers to easily run and deploy any type of code in the cloud. In the case of deploying models, the end product can be an API endpoint, similar to that of above.

How:

- Modify the functions and function calls that run remotely. Usually, this will be by adding decorators to the function that specifies what hardware and image they run on.

- For deployment, within the executed code, provide an image spec of dependencies, env vars, instructions for accessing the weights, etc. (this might be done in a YAML or in the code depending on the provider), then access the deployment via API

- Some providers are not totally serverless and require defining (e.g., YAML) and managing a static pool where all the functions run (the pool can still start automatically and scale up and down to zero).

Who: Modal, Anyscale, Beam, Runhouse

Many of these companies were featured in our previous post, but even in the time since, we’ve already seen a handful of new players emerge and new products released.

Challenges

The current allure of these platforms is obvious. However, when it comes to the long-term business and retention of customers, there are a number of challenges many of these providers will likely have to confront.

- Margins

The unfortunate truth is that this is a market of resellers – an inherently margin-pressed business model often made worse by commoditization over time. In these markets, when devs realize the markups they are paying, the instinct is to compare alternatives. Without a significantly differentiated, additional SaaS product on top of the platform, companies often find themselves in a race to the bottom on price, and margins become unsustainable. That is not to say success is not possible – look at Vercel. The path, however, is not necessarily an easy one.

- Will AWS build this?

How trite, we know. And yes, basically any startup in the infrastructure space has to think about this. But with AWS Lambda Functions sitting right there, it’s hard not to think about how much they could eat away at the market were they to add in GPU capabilities. Moreover, incumbent risk goes beyond just the major cloud providers here. We also see moves from players like Nvidia reducing friction points between developers and access to performant compute resources. The big players have the ability to restrict compute resources to service providers as they see fit, and in a world of GPU scarcity, their grip can become frighteningly tight if they want it to be.

- Latency and the cold start problem

A somewhat unavoidable reality of the serverless paradigm. When you are spinning up resources on demand, there will inevitably be a small lag before you are able to service the first request. The platforms are working on driving these wait times down as we speak, but getting them near zero might be a prerequisite for certain production applications.

- Graduation

A cumulation of the previous points. Once companies mature, they may decide to invest in building out and managing their own infrastructure. For high-traffic applications, the cost savings of serverless likely do not outweigh its markups. If the major cloud providers continue to expand their offerings and serverless latency issues remain largely unresolved, companies are increasingly likely to get to a point where the flexibility, reduced costs, and performance of managing your own infrastructure outweigh the inconvenience of complexity.

Looking ahead

Despite challenges that may lie in the road ahead, we continue to eagerly watch (and use!) these rapidly-developing platforms. Above all else, they’ve successfully achieved something critical – getting developers back to where they want to be. Developers do not want to live in the console of cloud providers, they want to live in their code. The shift towards these more modern compute options underscores that desire for efficiency and agility, which seems to have become non-negotiable as AI continues to evolve at light speed.