Operating a Security Operations Center (SOC) is one of the toughest jobs in the enterprise, and over the last two years, LLMs have only made it harder. Threat actors have moved quickly to adopt this new technology, using it to produce dozens of new and innovative attacks, such as hyper-personalized phishing emails, malicious LLM-generated scripts, and even pre-built agents that can execute custom attacks on demand. Until recently, SOC Analysts have had to protect the enterprise against these new, AI-enabled threats using only yesterday’s tooling and processes. In other words, they’ve been bringing a SIEM to an AI fight.

However, with recent advancements in LLMs and agent frameworks, a copilot-like program for the SOC has finally become a possibility. In the same way that GitHub Copilot has become a no-brainer for most forward-thinking engineering teams (and a several-hundred-million-dollar ARR business along the way), I believe that nearly every SOC on Earth will soon be assisted by some form of AI Analyst. The stakes of a breach are far too high to go on without the added coverage, and the shape of the problem space lends itself perfectly to the capabilities of frontier models and frameworks. While attackers have certainly moved first in their adoption of AI, I’m quite excited to now watch the SOC fight back, and I believe that the stage has been set for a potentially generational outcome in cybersecurity: a platform that will properly enable the enterprise to fight AI with AI.

Understanding the SOC

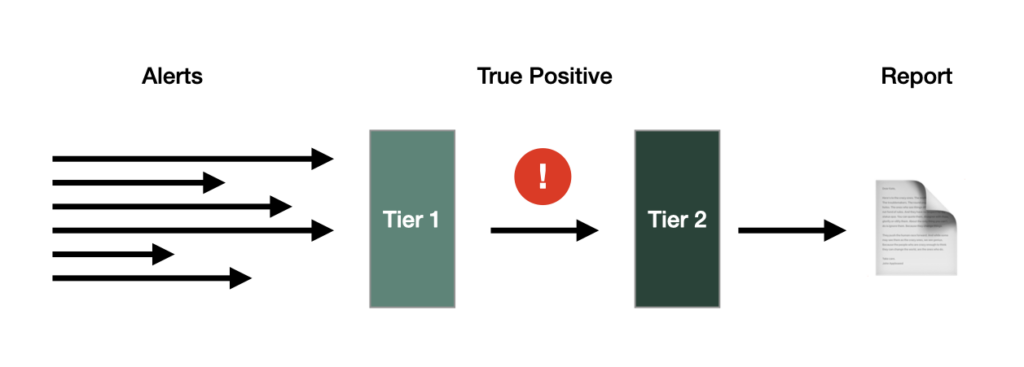

The role of the SOC is to monitor an enterprise’s infrastructure, applications, and security posture on an ongoing, 24/7 basis. The team is primarily tasked with responding to security incidents after they’ve already occurred, and is commonly staffed by analysts divided into three groups, appropriately titled Tier 1, Tier 2, and Tier 3 Analysts.

Tier 1 Analysts

Tier 1 Analysts are the first line of defense, and are tasked with triaging every alert that comes through the front door. The main focus of this job is to reach a verdict on each alert: “Is this an actual security threat? Yes or no?” To reach that verdict, the Tier 1 Analyst must first perform an investigation, which entails querying a variety of IT systems to gather data and support a decision. These analysts have one of the toughest jobs in the entire cybersecurity industry due to a combination of the following:

- Alert volume: Recent studies have suggested that the average SOC receives around 4,500 alerts per day. SOC managers try to size their teams to meet this workload, but the volume is so great that the average SOC still only processes about 50% of the alerts produced on any given day. This can result in a growing backlog of alerts, which obviously presents a massive security risk.

- Environment diversity: These alerts pertain to a wide variety of assets, including but not limited to endpoints, cloud infrastructure, networks, identity platforms, emails, and collaboration tools. Each of these environments is capable of kicking off security alerts, and each has a different “language” that needs to be understood, particularly when crafting queries for investigative work. Sometimes a single investigation will span several of these environments, and the mere act of context-switching between them can create substantial overhead.

- High stakes and time-sensitivity: To top all of this off, Tier 1 Analysts must be able to navigate this volume and complexity at high speeds and with high certainty. While SLAs vary per organization, it’s widely accepted that Tier 1 investigations should be resolved within 30 minutes of the alert being triggered, though I’ve spoken to some SOC managers who’ve maintained SLAs as low as five minutes. Bottom line: each of these alerts has the potential to be a legitimate breach, and the Tier 1 Analyst must reach a high-conviction verdict in a matter of minutes.
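To put the alert-volume figures above in perspective, here is a back-of-the-envelope sketch of how quickly untriaged alerts accumulate. It reads the figures as roughly 4,500 alerts arriving per day with about half of them processed, and it assumes (purely for illustration) that unprocessed alerts are never cleared or expired later. The function name is hypothetical.

```python
# Figures quoted in the text; treated here as daily averages.
ALERTS_PER_DAY = 4_500
PROCESSED_SHARE = 0.50


def unprocessed_alerts(days: int) -> int:
    """Alerts left untriaged after `days`, assuming none are cleared later."""
    return round(ALERTS_PER_DAY * (1 - PROCESSED_SHARE) * days)


print(unprocessed_alerts(1))  # 2250
print(unprocessed_alerts(7))  # 15750
```

Under those assumptions, a single week leaves well over fifteen thousand alerts that no one has looked at.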

With this context in mind, it’s no surprise that the Tier 1 SOC Analyst role is among the highest-turnover jobs in the enterprise. Analysts tend to stay in the role for at most one to two years before graduating into a different role, whether inside or outside of the organization. Due to this high turnover, SOC managers are perpetually hiring to fill the inevitable gaps in the team, which creates a number of knock-on challenges: institutional knowledge that helps accelerate investigation time is constantly lost, maintaining high team morale is incredibly difficult, and SOC managers have to turn their attention away from security to spend time on non-differentiating administrative tasks.

The majority of the AI-enabled tools to emerge in the last year have been designed to assist the Tier 1 Analyst persona, and rightfully so. They need all of the help that they can get.

Tier 2 Analysts

If a Tier 1 Analyst determines that an alert is malicious, the alert and corresponding investigation get escalated up to a Tier 2 Analyst. While the Tier 1 Analyst typically has less experience and has to make a decision within minutes, the Tier 2 Analyst has more experience and more time. The primary responsibility of the Tier 2 Analyst is to conduct a deeper, broader investigation that validates the root cause of the incident. This person may also be required to generate a report of the findings, which would later be presented to auditors and regulators.

Tier 3 Analysts

Tier 3 Analysts spend most of their time on threat hunting, proactively searching for vulnerabilities and attack paths in the organization’s security posture. While Tier 1 and Tier 2 Analysts are inherently focused on reactive work (i.e. investigating an incident after it has already occurred), Tier 3 Analysts are more focused on proactive work (i.e. searching for things that potentially could go wrong, so that they can be fixed in advance).

Enter: The AI SOC Analyst

Prior to LLMs and agent frameworks, if you wanted to write a program that automated the work of a Tier 1 or Tier 2 Analyst, you would’ve had to write a static set of rules for the program to follow for each investigation. However, the nature of investigative work is that it requires you to be flexible so that you can adapt to the nuances of each case. To draw a parallel from other fields of investigative work, a good detective doesn’t just ask the same set of boilerplate questions to solve each murder mystery; they maintain a level of flexibility that allows them to stray off course when they pick up an interesting clue, thus giving each case the unique attention that it deserves. While a rule-based program cannot deviate from the paths hard-coded into the application, LLMs enable the exact type of flexibility required to perform this previously human-operated task. Key unlocks here include capabilities like extracting variables from unstructured text, dynamically generating step-by-step plans for complex tasks, and generating bespoke code (or SQL) to execute these tasks.

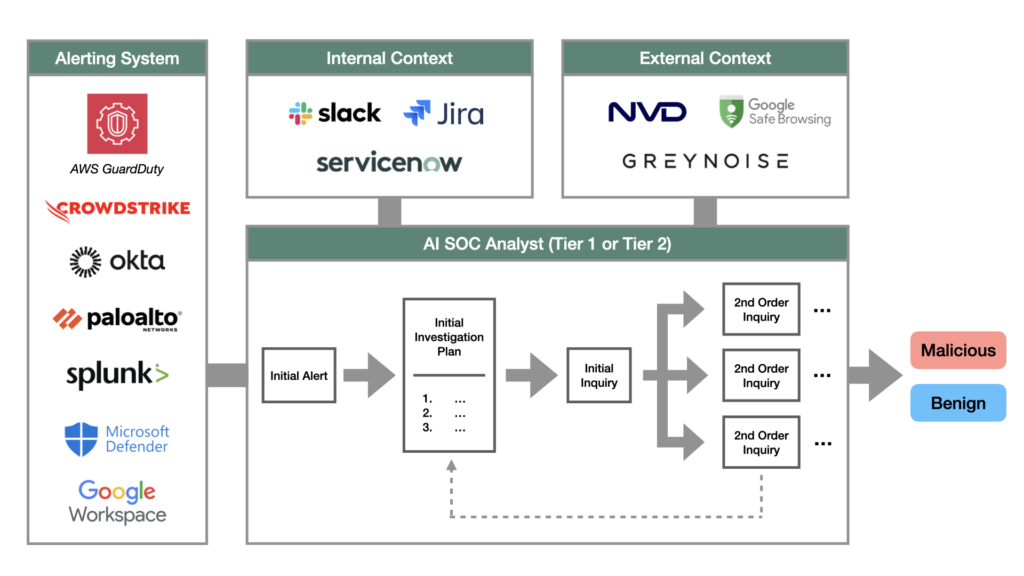

Armed with these new capabilities, we’re now seeing AI Analysts that can cleanly mirror the workflow and thinking of the highest-performing SOC Analysts. Investigations that previously required 30-60 minutes of a person’s time can now be completed by an AI Analyst in seconds. That workflow tends to look something like the following:

- Comprehend the alert: AI Analysts will start by ingesting alerts from a variety of domains, e.g. cloud infrastructure (AWS GuardDuty), endpoints (CrowdStrike, SentinelOne), identity (Okta), networks (Palo Alto Networks), SIEMs (Splunk, Panther), and more. Once an alert is ingested, the program needs to understand and extract the most essential details. For example, if the alert is something like: “IP address 92.37.142.153 attempted a suspicious login from Russia for user Shayan Shafii”, it should understand that the key variables from the alert are (a) the IP address, (b) the location it relates to, and (c) the user identity being targeted.

- Generate an initial investigation plan: Every investigation has to start somewhere. In the same way that a human analyst would have 2-3 things that they would initially check for, the AI Analyst needs to generate an initial plan of its own. Exciting startups like Prophet Security have published examples of investigation plans, which are often tailored to each environment: the first things that you would check for on an identity alert are likely different from what you would check for on an endpoint alert, and even within those domains, the initial plan can look different depending on the context of the alert. The bottom line is: you need to generate a queue of tasks that get the investigation started, and it should be tailored to each incident. Over the last 12 months, as agent frameworks have matured, task planning specifically has emerged as a common challenge that the engineering community is focusing effort on. ReAct, for example, is a popular method with academic backing; framework vendors like LangChain have also written extensively on the topic.

- Execute tasks one-by-one: The AI Analyst can then pop the first task off the queue and begin the investigation. Executing these tasks is where recent advancements in code generation really come into play. To execute pretty much any task on the investigation plan, the analyst, human or otherwise, must gather data that lives in other tools. Where a human analyst may be able to click around in a dashboard or UI, a computer program would need to generate API calls or SQL-like queries to gather that same information. These data sources generally fall into one of three buckets: (a) internal security tools (e.g. Okta, CrowdStrike, Palo Alto Networks), (b) internal knowledge bases that provide organizational context (e.g. Slack, Jira, Confluence, ServiceNow), and (c) external fact sources that provide security-related information about the outside world (e.g. the National Vulnerability Database). AI SOC Analysts need to be integrated with each of these environments in order to gather data and answer questions for each step of the investigation.

- Recursively adjust your plan: When reviewing findings from each task (i.e. the responses from the API calls), you ideally want to find something interesting. These are clues that help inform where you should steer the investigation next. If the program finds an interesting clue, it can create follow-up questions, add them to the plan, and follow the trail of information accordingly. Because follow-up questions are pursued before the rest of the original plan, the program’s execution resembles a depth-first search, where each node in the graph is a step on the investigation plan. To make this concrete, let’s say that Okta kicks off an alert that there was a suspicious IP address associated with a login attempt. One of the initial steps of the plan might be to check Splunk for other login attempts with that IP. The AI Analyst could generate a Splunk query to gather all Okta logs with that IP, and in turn receive a response showing that there were 5,000 other attempts in the last hour. This would likely indicate some form of malicious behavior. Instead of continuing down the original investigation plan, the AI Analyst should adjust to this new information by forming a new question and adding it to the front of the queue: If there were 5,000 attempts from this suspicious IP address in one hour, were any of them successful?

- Reach conclusions: The program can continue this loop of generating tasks, forming queries, reviewing responses, and reacting to the new information. Eventually though, it has to know when it’s cracked the case. Rather than spinning its wheels in an infinite loop, the AI Analyst must recognize when it has the information it needs to classify the alert as malicious or benign.

- Show your work: Lastly, and perhaps most importantly for buyers, the AI Analyst must granularly show how it reached its conclusion. This means showing each step of the investigation plan, the API calls that went into answering each question, and the API responses that came back. During vendor evaluations, SOC managers will often backtest the AI Analysts on historical alerts to see not only whether they land on the same conclusions as human analysts, but also whether they produce similar artifacts from their work. Just like in math class, if you don’t show your work, you don’t get any points.
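The workflow above can be sketched as a small loop. This is a minimal illustration under heavy assumptions, not how any vendor implements it: a regular expression stands in for LLM-based extraction, an in-memory list (`LOGS`) stands in for a log store, and the task functions, the threshold of 100 attempts, and all names are hypothetical. Follow-up tasks are pushed to the front of the plan so that a promising clue is chased before the rest of the original plan, which is what gives the loop its depth-first character.

```python
import re
from collections import deque
from dataclasses import dataclass

ALERT = ("IP address 92.37.142.153 attempted a suspicious login "
         "from Russia for user Shayan Shafii")

# Hypothetical stand-in for a log store: 5,000 attempts, exactly one success.
LOGS = [{"ip": "92.37.142.153", "success": i == 4999} for i in range(5000)]


@dataclass
class Step:
    question: str  # which task was run
    finding: str   # what came back (the "show your work" trail)


def comprehend(alert: str) -> dict:
    """Extract key variables. A regex stands in for an LLM call here."""
    pattern = (r"IP address (?P<ip>\d+(?:\.\d+){3}) .* from (?P<location>\w+)"
               r" for user (?P<user>.+)")
    match = re.search(pattern, alert)
    return match.groupdict() if match else {}


def count_attempts(ctx):
    n = sum(1 for event in LOGS if event["ip"] == ctx["ip"])
    # An unusually high count is a clue worth following up on.
    follow_ups = [any_successful] if n > 100 else []
    return f"{n} login attempts from {ctx['ip']}", follow_ups


def any_successful(ctx):
    hits = sum(1 for e in LOGS if e["ip"] == ctx["ip"] and e["success"])
    ctx["successes"] = hits
    return f"{hits} successful logins from {ctx['ip']}", []


def check_user_travel(ctx):
    # Placeholder for a lookup in an internal knowledge base.
    return f"no travel on record for {ctx['user']}", []


def investigate(alert, max_steps=10):
    ctx = comprehend(alert)
    if not ctx:
        return "inconclusive", []
    plan = deque([count_attempts, check_user_travel])  # initial plan
    trail = []
    while plan and len(trail) < max_steps:  # step cap avoids endless loops
        task = plan.popleft()
        finding, follow_ups = task(ctx)
        trail.append(Step(task.__name__, finding))
        plan.extendleft(reversed(follow_ups))  # follow-ups run next
        if ctx.get("successes", 0) > 0:  # enough evidence: stop early
            return "malicious", trail
    # Simplification: a real system would likely escalate rather than assume.
    return "benign", trail


if __name__ == "__main__":
    verdict, trail = investigate(ALERT)
    print(verdict)
    for step in trail:
        print(f"- {step.question}: {step.finding}")
```

In this toy run, the high attempt count triggers the follow-up question about successful logins, the single success is enough to stop, and the travel check is never needed. The returned trail is the artifact a reviewer would inspect.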

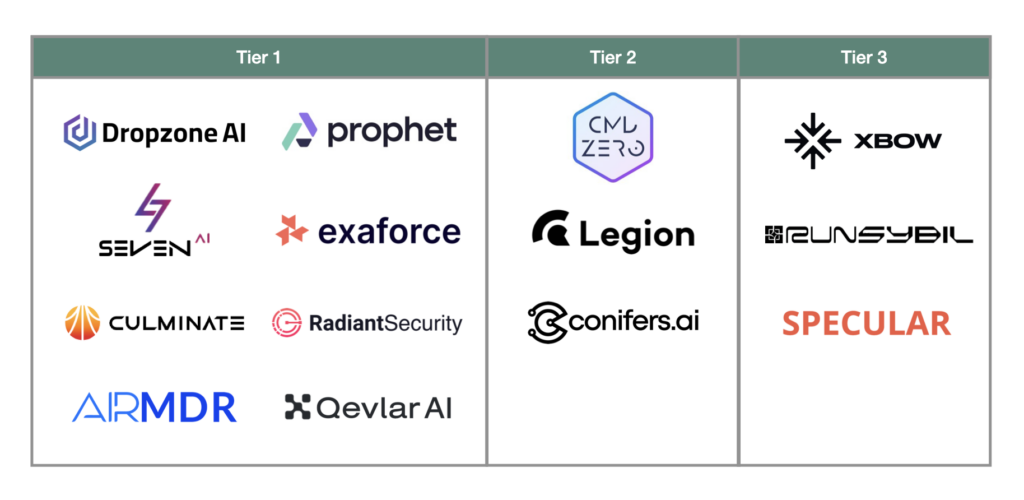

The above description is a high-level overview, and is by no means prescriptive. Given the rapid pace of innovation in agent-based design patterns, implementation details are likely to evolve, and the frontier of capabilities is likely to continue shifting outwards. While today I structure my view of the market in terms of support for Tier 1, Tier 2, and Tier 3 Analysts, these lines may also start to blur. Meaning, startups operating in the Tier 1 space may expand towards Tier 3, and vice-versa. What I do know is that there has been a sudden explosion of startups building in this space, which speaks to the scale of the opportunity and the recency of the technical unlock.

Companies above: Dropzone AI, Prophet Security, Seven AI, Exaforce, Culminate Security, Radiant Security, AirMDR, Qevlar AI, Command Zero, Legion Security, Conifers AI, XBOW, Runsybil, Specular

Note: While Tier 3 threat hunting is technically different from pen-testing, I have included emerging AI pentesting solutions above in the Tier 3 bucket, as the buyers may merge over time.

Open Questions

Level 5 Autonomy vs. Human-in-the-loop

In my conversations with founders and buyers, the room has consistently been divided on whether this is a problem that can be solved with software alone, or whether you need a human-in-the-loop (HITL). Given the implementation described above, and the fact that many of these AI SOC Analysts are already deployed in production environments, there are certainly cases where a program can execute an investigation end-to-end. Multiple CISOs have told me that the software works “remarkably well” for the majority of cases. However, it seems that some of the more nuanced investigations can still require a HITL. I’d like to believe that as LLM performance continues to progress, agent frameworks continue to mature, and the problem space becomes better understood, programs will eventually be able to triage 100% of Tier 1 alerts autonomously. This is roughly analogous to Level 5, the highest of the levels of autonomy defined for self-driving cars. In other words, if technology is the bottleneck, I believe that we will overcome that.

If, however, buyers actually prefer to maintain a set of human eyes on the problem, that is an entirely different matter. Many CISOs have suggested that the future will be a combination of Level 5 Autonomy alongside HITL, meaning that a program will autonomously triage Tier 1 alerts, while the role of the Tier 1 Analyst fundamentally changes to reviewing the decisions made by the software. In the case of Tier 2 Analyst support, some folks have also suggested that the correct approach is a copilot-like tool that augments existing human analysts. Meaning, a person would continue to steer the investigation, but with the added help of a tool that can quickly answer questions for them. Overall, it remains to be seen which form factor receives the most demand in the market, and how the underlying technologies will evolve to support it.

How to sustain differentiation

Given the explosion of companies in the space, differentiation will be key. In a competitive bakeoff with two AI Analysts, how will a buyer choose one? And once you’re deployed with a customer, how do you retain them? A few dimensions come to mind:

- Accuracy: If you give the same set of alerts to two AI Analysts, which one will produce the correct answer more often? Similar to the last generation of ML-based detection and response companies (e.g. CrowdStrike, SentinelOne, and Abnormal Security), model improvement and network effects will play an interesting role here. There are two levers that companies pull to differentiate via accuracy: one is local improvement, and the other is global improvement. Local improvement is the process of landing in an environment, gaining more organizational context, and delivering improved accuracy specific to that customer, which increases switching costs. Global improvement is the network effect of landing many customers and extending the learnings from one customer onto another. In the long run, the standout winner will be a company that nails both of these motions (among other things).

- Mean Time to Recovery (MTTR): SOC teams are heavily measured on a single metric: the time it takes to close tickets. The lower the number, the better. If a product can reach conclusions faster, require less human intervention, and offer additional product surface to drive workflows further downstream from the investigations, that could certainly move the needle.

- Depth of integrations: As you likely gathered from the diagram above, there is a tremendous amount of integration work required to build these products. Startups must build deep read and write integrations into alerting systems, internal knowledge bases, and external fact sources. In conversations with SOC managers, it’s become clear that support for these various environments can be make-or-break in purchasing decisions.
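The first two dimensions lend themselves to simple measurement in a backtest on historical alerts. The sketch below is one hedged way a buyer might score a candidate AI Analyst against past human verdicts. The record fields, the sample data, and the choice to count missed threats separately are all illustrative assumptions rather than an industry standard.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class BacktestCase:
    alert_id: str
    human_verdict: str          # "malicious" or "benign"
    ai_verdict: str             # "malicious" or "benign"
    ai_minutes_to_close: float  # time the AI Analyst took to reach a verdict


def score(cases):
    """Summarize agreement with human analysts and time to close."""
    agreed = sum(c.human_verdict == c.ai_verdict for c in cases)
    # A missed threat (human said malicious, AI said benign) is the
    # costliest kind of disagreement, so it is reported on its own.
    missed = sum(
        c.human_verdict == "malicious" and c.ai_verdict == "benign"
        for c in cases
    )
    return {
        "agreement": agreed / len(cases),
        "missed_threats": missed,
        "mean_minutes_to_close": mean(c.ai_minutes_to_close for c in cases),
    }


# Made-up sample data, for illustration only.
history = [
    BacktestCase("a1", "benign", "benign", 1.0),
    BacktestCase("a2", "malicious", "malicious", 2.0),
    BacktestCase("a3", "malicious", "benign", 3.0),
    BacktestCase("a4", "benign", "benign", 2.0),
]

print(score(history))
```

Running two candidate products over the same historical set and comparing these summaries gives a rough, like-for-like view of accuracy and speed, though it says nothing about the quality of the investigation artifacts each one produces.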

Competitive pressure from large security platforms

While I’m obviously excited about the startup activity in this space, there are a number of larger incumbents also introducing AI-enabled investigation products. See: CrowdStrike Charlotte AI, SentinelOne Purple AI, Microsoft Copilot for Security, and Tines Workbench. Given the widespread presence of these vendors, it comes as no surprise that many SOC managers are getting their feet wet with AI by trying these products first. I have two big questions about these offerings:

- If the market demands Level 5 Autonomy, will they achieve that? In most cases, when a large company “adds AI,” it amounts to just adding a chatbot, which still requires a human to ask questions. If the market persists with a mostly human-driven investigation process, then these tools are positioned well given their market presence and convenience. If, however, the market demands Level 5 Autonomy, these tools (in their current form) may be seen as adding limited value. In the self-driving car framework, they would be more akin to “cruise control” than Level 5 Autonomy.

- Will they expand their scope? Several CISOs have stated that they would prefer an independent AI SOC Analyst that reduces vendor lock-in and provides visibility across all domains (e.g. endpoint, identity, cloud, and more). Accordingly, these CISOs have had concerns about offerings from CrowdStrike, SentinelOne, and Microsoft, which increase vendor lock-in and lack visibility into other platforms. If each alerting platform rolls out its own chatbot, the investigation process would devolve into context-switching between various chat interfaces, which is not the step-function improvement promised by AI. With regard to scope, Tines Workbench does stand out for its visibility across domains.

Regardless of the answers to the questions above, founders building AI Analysts will need to have a very clear plan for how they will land and coexist with some of the vendors outlined above. Buyer preferences for platform solutions vs. best-of-breed products will also come into play here.

Closing thoughts

Operating a SOC is a very, very tough line of work. In the last few months, every SOC manager I’ve spoken to has been wrestling with a firehose of alerts, low job satisfaction, and consequently high turnover amongst staff. To top all of this off, we’re already paying millions of dollars for this outcome. SOCs are expensive, manual, and imperfect, but most importantly, they are business critical. The enterprise is collectively leaning on them to protect the company against every adversarial threat, and despite this, analysts are still clearly under-resourced. These recent advancements in AI present an opportunity for us to finally give SOC Analysts the support that they deserve.

While as a developer I have at times been skeptical of the capabilities of LLMs, the more time I spend with frontier models, agent frameworks, and SOC Analysts/managers, the more convinced I become that there is a tremendous opportunity here. I believe that the technology is capable of doing the work, and that the SOC would be one of the most fitting homes for it in the enterprise. If you’re a founder, engineer, or practitioner spending time in this space, I’d love to chat. Please feel free to drop me a note at shayan@scalevp.com.