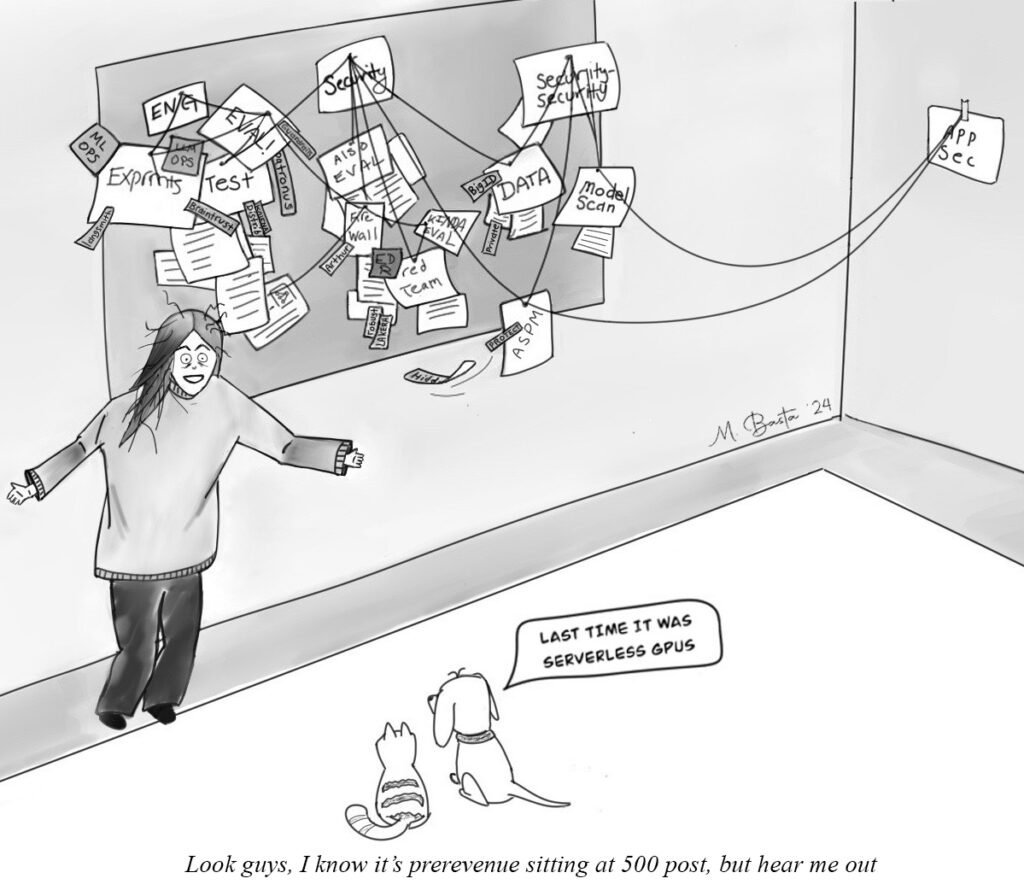

Two years ago it was unclear if AI security and reliability was anyone’s problem. Now, it’s starting to look like everyone’s problem. After all, it turns out that owning a product that might spew heinous slurs and leak all your proprietary data when it’s feeling cheeky might be a bad thing.

It would appear that generative AI and LLMs have finally given AI security and reliability a moment in the sun. While the category has existed for some time, practitioners were more or less left screaming into the void until recently. Critics were keen to deem it a solution in search of a problem, and the buyer profile was also unclear. Was AI reliability a safety problem that was the responsibility of a security team? Or was it an operational issue that should be addressed by an ML or engineering team? For a while, it was not obvious that it was enough of a concern to be the responsibility of anyone at all. That is no longer true.

In this blog post, we’ll explain what has changed and what there is to do about it. We cover the way that AI reliability spans both engineering and security teams and the many solutions that are grounded in solving the same underlying problem – evaluation. We’ll also discuss where issues become a pure security concern, and the parallels with traditional security domains like AppSec and data security.

Why does everyone suddenly care about AI Reliability?

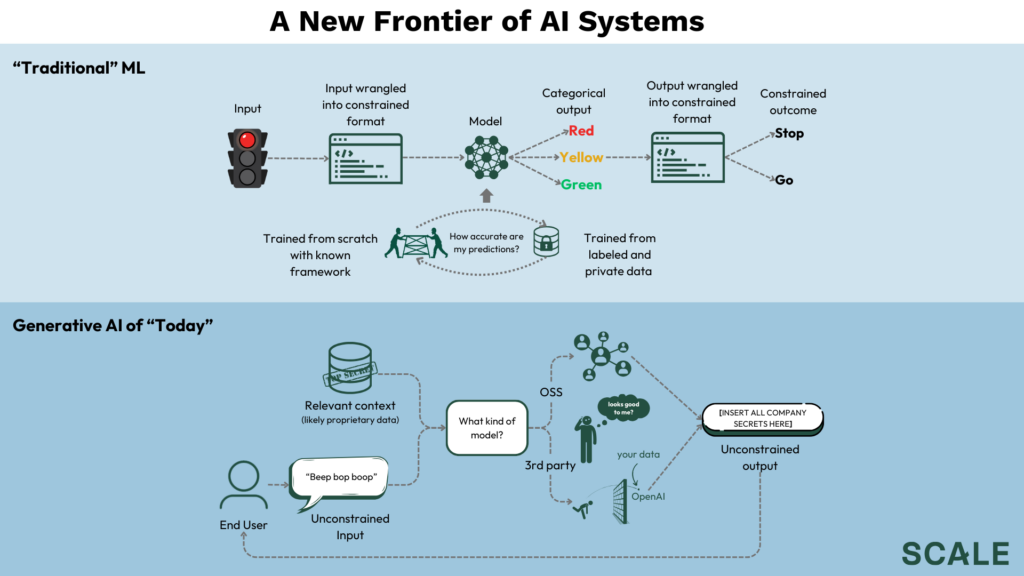

What changed? The simple answer is that the generative models of today are fundamentally different from the discriminative models that dominated the world of “traditional ML,” and so are the applications that make use of them.

- Issue #1: Unlimited output possibilities = unlimited possible issues. You obviously don’t want the computer vision model in your autonomous vehicle (a discriminative model) thinking a red light is a green light. But from a testing perspective, you just need to check for one thing – do I detect the color of the light correctly? You don’t need to worry about whether or not it’s not going to engage in hate speech and emit social security numbers while it’s at it. When your model is responsible for “generating” something new, however, for better or worse, that something could theoretically be anything. Generative AI product owners don’t just need to think about the correctness of the model’s answer, but its safety, relevance, toxicity, brand alignment, presentation of hallucination, etc.

- Issue #2: Quantifying performance is subjective and unconstrained. The performance of most discriminative models can be quantified by statistical metrics that measure how closely the model’s decisions align with what is categorically correct. And, to make life even easier, discriminative models also tend to be supervised (i.e. trained on labeled data), so you can get a good idea of where performance is by measuring how closely the model’s decisions align with what is known to have been correct in the past. For a generative model like a chatbot, however, evaluating the quality and acceptability of output is not just multidimensional (i.e. issue #1), but not so straightforward – what does it actually mean for something to be good, safe, or useful? Does it mean the same thing for me as it does for you? An AV thinking a red light is a green light is categorically bad. But if ChatGPT said, “Sup, bitch?” is that actually bad? Perhaps ChatGPT should be aware that certain youthful folk can find derogatory salutations to be a tasteful form of an endearment. While some statistical metrics for NLP like BLEU and ROUGE scores do exist for specific tasks, they are known to fall short, and the lack of a reliable way to quantify performance has become widely acknowledged.

- Issue #3: Data exposure. The way AI fuses the control plane and data plane of an application is a security concern that comes to fruition when (a) models are generative and (b) inference is exposed to the user. For context, the code that controls your everyday, “non-AI” application is separated from the data it uses, which lives in a database. The data can only be accessed and interacted with in the ways that are explicitly defined in the code. With AI, the data and control planes are inextricably linked through the model itself (or through the retrieval pipeline), which makes life hard. In “traditional” ML this isn’t too much of a problem because the model can effectively be treated as an extension of the data plane. Inputs and outputs are wrangled into a constrained, predefined format that result in one of a preset number of outcomes – an AV’s camera captures an image of a stoplight, the image is processed and sent to the model, the model detects a green light, the code tells the car to go. It is not just the architecture of the model that constrains the system, but also the interface. Both ensure that it is at no point possible for the millions of images that were used to train the model to be exposed to the user. These constraints evaporate with the way generative models are being used today, which tend to expose inference to the user at both input and output. In a traditional application, SQL injections are a known attack path to breach the data plane from user input, and parallel concerns over prompt injections are on the rise for LLMs. There is also the concern of RBAC – regardless of whether it’s adversary at work, how do you ensure that users only interact with data they have permission to see? To make life even more complicated, data poisoning, the practice of contaminating training data sources to compromise the model performance, is now relevant as well. Many of today’s datasets originate from public domains (e.g. the web), that are also publicly mutable. Data sources that contain malicious intent or misinformation, whether it originates from a bad actor, or even just a joke, could affect your model.

- Issue #4: New models, third parties, and the AI’s new supply chain. The issues stated above all relate to risk presented by what can go into and out of your system when it is exposed to the end user. However, generative AI and LLMs have also brought a massive shift in how models are being developed and where they originate. These changes come with a whole additional set of security concerns. We’ll touch on this more in a bit.

Regardless of where you sit in the org, AI reliability and security is now staring everyone in the face. Engineering and product teams have no way of testing product quality and are fumbling around in the dark for some semblance of a metric and way to do CI/CD. Security teams are watching gasoline get poured on their homes as data flows willy-nilly through RAG pipelines and 3rd party models, and it feels inevitable that someone will light a match.

So, what’s next?

The coevolution of AI Security with MLOps & the AI Evaluation umbrella

Whether you are an engineering or security stakeholder, before you can do anything to improve reliability, you need to define what constitutes good and what constitutes secure, and you need to figure out how to measure it. There might be a long list of concerns to check against (i.e. issue #1), and where something falls on the spectrum of acceptability may be fluid and subjective (i.e. issue #2)—but ultimately, you need to determine two things:

- Is what I am looking at something I want to be passing into or or out of my system?

- If not, how do I improve my system’s ability to filter for it?

To do this you need an evaluation mechanism. A few random humans saying, “Meh, looks good to me,” does not constitute a valid experimentation platform or CI/CD system at scale, does not provide a viable way to intercept problematic output before it reaches end users at runtime, and cannot be used to get a holistic view of the performance and safety of a system running in production.

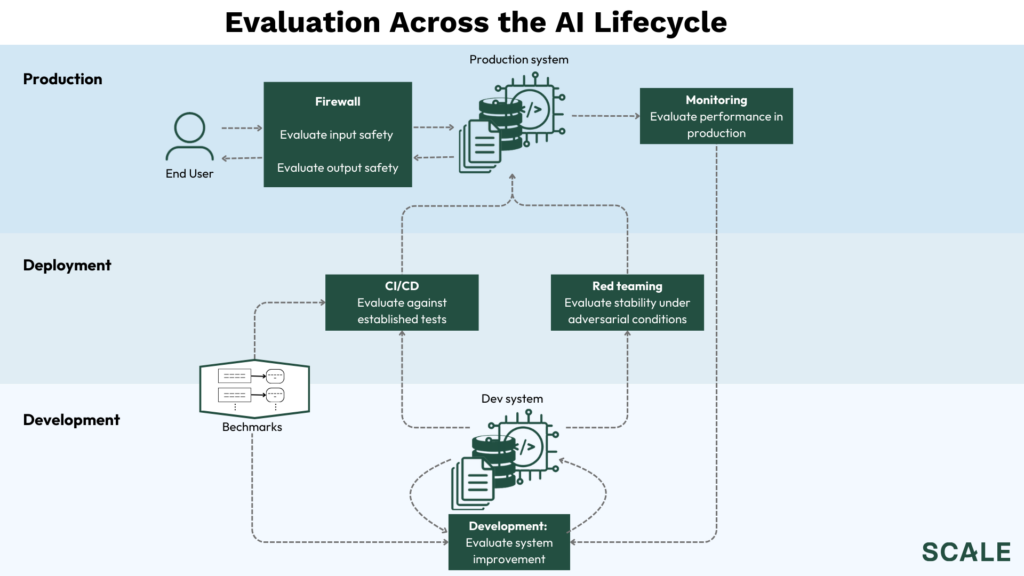

Fortunately, more systematic evaluation mechanisms are beginning to emerge, and they are enabling a number of solutions that solve generative AI’s reliability issues throughout the development and deployment lifecycles.

General eval

When using manual feedback as your evaluation strategy becomes intractable, the best option is to use another model. Regardless of where evaluation is being used, the first step is making sure that model itself is trustworthy, purpose built for the evaluation task at hand, and performant at scale in terms of latency and cost. A good evaluation model carries value throughout the development to deployment lifecycle and can be applied to many of the other categories discussed below.

Patronus AI takes a research driven approach to the problem and is developing models that are purpose built for evaluation. The framework they provide also allows customers to integrate custom requirements to make evaluation tailored to their specific use cases. Guardrails is another company offering a suite of what they call “validators.” Their model hub is offered open-source alongside their semantic validation framework to ensure your model is within bounds. Other startups are looking to build best in breed evaluations for a more narrow focus, like Ragas, which offers a solution for quantifying RAG pipeline performance, and Vectara’s HHEM model specifically for detecting hallucinations.

Benchmarks

Benchmark datasets provide collections of input prompts and accompanying output pairs or standardized metrics to test models. In isolation, using these datasets still requires manual review or the use of a non-objective metric. Nevertheless, they can still be valuable for getting a baseline understanding of the state of a model. A number of popular ones have been born out of academia, including MMLU, which tests commonsense reasoning on a range of topics, and HELM, which tests a number of tasks across domains. With increasing skepticism of general purpose benchmarks, however, a number of startups are looking to innovate here. Patronus, for example, compliments their automated evaluation solution with domain specific benchmarks, like FinanceBench.

Experimentation and testing frameworks

Whether you are using an automated solution to evaluate your system or a manual one, it’s still important to keep track of the performance driven by each part of your system through the development lifecycle. AI systems are comprised of a number of moving parts — prompts, embeddings, retrieval and ranking strategies, models, etc. — and it’s increasingly difficult to keep track of things as teams iterate. It’s great if your automated evaluator and your benchmarks can catch issues and tell you your system has improved, but how do you catch these issues in CI/CD? And if your system improves or degrades, how do you know what caused it? Is it because you changed the prompt? The new embedding model you used? Or is OpenAI just A/B testing again and secretly changing the model you’re calling? The pyramid of unit tests, integration tests, and e2e tests is core to SWE, but developers are struggling to find an AI equivalent. Teams need to evaluate both individual elements as well as their system’s entirety as they develop, as part of CI/CD, and in production to inform future development efforts.

MLOps companies like our very own CometML have been addressing a number of these issues for years. Afterall, the question of how to adequately test and experiment with models is not entirely unique to generative AI. However, the component parts of generative AI are fundamentally different, and systems are developed in a way that is more resemblant of a typical SWE workflow than the siloed MLE workflow of the past. Moreover, generative AI makes the testing issue not only more pressing, but also harder to solve. With this in mind, MLOps companies like Comet are adding LLM specific features to their platforms, and there is an entirely new generation of startups purpose built for the AI of today.

Langchain, whose open-source framework exploded in early 2023, now offers Langsmith, which helps developers visualize the sequence of calls of their systems, measure performance across component parts, and improve or debug in a centralized, systematic fashion. Braintrust has a solution that lets teams systematically determine which evaluations fail where. Their solution allows you to track performance over time, and even helps you construct your own benchmarks, or “golden” datasets from live examples to power your evaluations. Another player is Kolena, which enables testing across several modalities and lets teams determine which models perform best in which conditions to implement the most effective ensemble.

Red teaming

The security concept of red teaming has been around since the cold war. A “red team” will act as an adversary and attempt to breach a system. Any vulnerabilities found can then be reported and addressed before they are discovered by a true adversary in the wild. When applied to AI, it means simulated attackers bombard your model to try and produce problematic output or failures. The process can serve as a final step to evaluate the robustness of your system (ideally) before deployment. Strategies range from hiring skilled experts to break models or crowdsourcing efforts with bug bounties to training another model to act as an adversary.

Available solutions include Robust Intelligence’s Algorithmic AI Red Teaming, Lakera’s Lakera Red product, and even hacker events like the one backed by the White House.

Firewalls & EDR

Try as you might to secure and optimize your model before it is exposed to the end user, you still never know what might come out of that thing. If you have a way to evaluate whether a model’s output is acceptable to pass on to a user, why not double check and runtime? With concerns over prompt injection on the rise, you probably also want to evaluate input – if a user is demonstrating malicious intentions, why bother processing the query?

Firewalls and endpoint detection seem like a no-brainer. It is worth noting, however, there are a number of additional complexities to consider when using these types of evaluations at runtime. If for every query you get, you run a model to evaluate input, run a model to process the query, and run a model to evaluate output, are your inference costs going to be 3x? Will your user be waiting three times as long? These are all considerations that go into building an effective firewall or EDR product for AI.

What now?

Organizations looking to improve the reliability and safety of AI have a wide solution space to explore. For some, general evaluation may provide a silver bullet that can be used across engineering and security and throughout the AI lifecycle. Others may have one issue or development stage that takes precedence and look to a more purpose built solution. Some may adopt multiple solutions.

The reality is that despite the underlying technology, products sold to engineering buyers often look quite different than those sold to security buyers. The divergence between engineering and security priorities is a tale as old as time. Engineers want to move fast and optimize the software’s ability to be used as intended. Security practitioners care about whether the system can be abused for malicious intent and are willing to put safety before performance. When it comes to evaluation, for example, a security buyer might prioritize detecting something like PII, whereas an engineering buyer might want to prioritize preventing hallucinations and irrelevant responses. In an ideal world, an omnipotent, best-in-class evaluation product would be able to detect and measure any type of issue and do it fast enough to be used anywhere. But, we also know that security buyers love defense-in-depth and are willing to adopt multiple products if that’s what it takes to improve their security posture.

AppSec, data security, and governance for AI

Okay, deep breath. We have now arrived at the whole other set of vulnerabilities introduced by generative AI. When it comes to your software supply chain and third party exposure, there are some things evaluation can’t exactly solve. Evaluation-based solutions address validating the safety and quality of what goes in and and out of your system when it is exposed to an end user. From a security standpoint, they can be thought of as securing your perimeter at the point of inference. But what about securing the individual assets that actually comprise your system? What about the risk introduced by all of the third parties you are now using?

The way AI applications are being developed today has a number of substantial differences from those of old world ML, and there are external dependencies and points of exposure at almost every turn. Most of this is born out of the way models are being developed and where they come from.

A brief history of trends in model development

The centerpieces of your AI system are its model and the data it uses. As organizations look to build with AI, they have a few options to choose from:

- Build the model from scratch yourself: Gather your own dataset, define the architecture of your model (typically using an open-source framework like Pytorch, Tensorflow, or Sklearn), train the model.

- Open-source: Download the weights of a pre-trained model (probably from Hugging Face), use it out of the box or fine tune it on additional data, figure out how to host it yourself.

- Use a third party, hosted solution: Call the API endpoint of a model provider like OpenAI or Anthropic.

Option 1 was almost always the way to go pre-gen AI explosion. The nature of the models meant that option 2 was not warranted or even of benefit, and option 3 simply did not exist yet. But when open AI launched an onslaught of models that were (a) better than anything anyone or their mothers had ever built and (b) bigger than anything anyone or their mothers had ever built, things changed.

Suddenly organizations were fixated with the idea of taking advantage of these massive, general purpose models (i.e. foundation models). Unless you were a competing research lab like Anthropic or Mistral or a tech giant like Meta or Google, building your own foundation model equivalent was effectively intractable. Even just acquiring the sheer amount of data and compute required to train a model of that size was insurmountable. That meant you had to use someone else’s.

That brings us to options 2 and 3 and the risks that come with them.

The risk of open-source models: The case for model scanners

Managing the risk presented by open-source dependencies is a known problem space. If you read Shayan Shafii’s blog on AppSec, you’ll know it’s called Software Composition Analysis (SCA). When it comes to models, however, things can get a bit more involved.

Unsafe tensor storage

When a model is saved down and later loaded back into memory, it is serialized and deserialized in a way that would make a CISO’s head explode. The most common concern is specifically with a file format called “pickle,” a popular format for saving any Python object by converting it to a byte stream. Pytorch, which has become the predominant deep learning framework, has historically saved down models using Pickle. The issue is that it is possible for arbitrary code to be injected into a pickle file. When it is loaded again, that code can be executed immediately.

In theory, even for “safer” file formats like h5, which is used by frameworks like Tensorflow where you can expect that arbitrary code won’t just be run when you load the file, it is possible to still inject unsafe code if you manage to bake it directly into the layers of the model itself. Even though this code won’t be run immediately when you load the model, it will be run at the point of inference when the model is called.

As of today, traditional scanning tools like the ones offered by SCA tools like Snyk, Checkmarx, or Veracode are not built to scan models for vulnerabilities in these serialized formats, but a number of startups like Protect AI and HiddenLayer have built their own scanners to do the job. They will deserialize the model’s code safely and scan it for syntax that (a) should not be present in the standard code of a model, and (b) are indicative of something potentially unsafe. Something like an os command, for example, has no place in a model and should be a red flag. Additionally, with much of the concern grounded in around pickle files, another solution has come out of Hugging Face, who also released their Safetensors file format in 2022 as an alternative to pickle.

Unknown exposure

Even if there are no vulnerabilities explicitly baked into an open-source model’s file, you still don’t know what it was exposed to before it got to you. Did a bad actor construct it in a way that makes it particularly susceptible to prompt injection? Where did the data come from and what did it contain? Has it seen copyrighted data? Misinformation? Companies like OpenAI are highly motivated to ensure that their models do not contain these types of vulnerabilities, and it seems like imminent legislation will even require that they make some of these disclosures. When it comes to open-source, however, it is unclear what applies and who is responsible.

The risk of using 3rd party models: A new frontier of data security

This one is a bit more straightforward. You have a bunch of proprietary data that could very well contain company secrets, PII, etc. Instead of using it to train a model that you control, you instead blast it directly into the context window of a model in the cloud where it’s consumed by a third party that has a questionable data security record and may or may not be consuming your data for its own training purposes. Not good.

For obvious reasons, a lot of organizations are now thinking about DLP and data sanitization in the context of AI. In addition to protecting you from leaking data to model providers, wiping sensitive information can solve a number of other concerns as well. Data leakage to the end user is also a massive issue. A solution that redacts sensitive information can act as a safety mechanism here as well. Additionally, depending on the solution, a similar technique may be able to be applied to IT use cases for organizations looking to prevent employees sharing sensitive data via interfaces like ChatGPT.

The data security landscape has a long and in depth history of its own. There are a number of well-established companies like our own BigID that have introduced AI-focused solutions as natural extensions of their existing platforms. Other companies like PrivateAI are more narrowly focused on specific data types that are particularly sensitive and at risk like PII. Another approach seen with companies like Hazy and Mostly.ai is to go fully synthetic.

Other solutions

The list of security concerns around AI only continues to build. New compliance measures may be imminent. How to properly implement RBAC remains unclear. Cloud security companies like Wiz are eager to point out infrastructure risks are on the table too. The list goes on. In response, a number of startups are looking to move past point solutions to more all-encompassing products.

Visibility & Governance

In case it wasn’t obvious, there are a lot of moving parts, and it begs the question of whether improving your security posture actually just requires figuring out what on earth is going on in your system in the first place. Who has access to what? What model providers and hosting platforms am I dependent on, and what am I exposed to as a result? So, we have come to the land of governance.

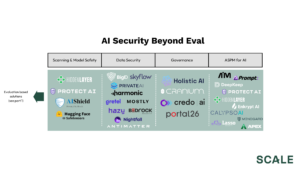

Companies like Holistic AI, Cranium, Credo, and Portal26 have been established to streamline the governance process and provide teams with the visibility necessary to take steps towards security and compliance.

ASPM for AI

SCA is not the only parallel to draw from AppSec. In recent years, the ever expanding landscape of software supply chain issues gave birth to ASPM solutions, which look to provide a one-stop-shop for all things AppSec (again, I refer you here). A number of startups like HiddenLayer and ProtectAI are looking to do the same for AI.

Potential headwinds

Chicken-or-egg?

The AI security market has been around for a while. While generative AI has brought an onslaught of potential vulnerabilities, it is undeniably still early days. Evidence of AI supply chain-related incidents happening in the wild still remains quite limited. Evaluation-related solutions benefit from people watching crazy shit come out of their models as they are being developed in real time, so it’s obvious that it necessitates an immediate solution. For other concerns, however, it is unclear where we are in the solution adoption cycle.

The question is not just about how much of the concern should be attributed to hype and fear mongering. Even if the risks are entirely valid, it is still difficult to create a solution for a problem before you know exactly what it will look like. However, this is ultimately a market that benefits from security buyers that are notably more risk averse than their engineering counterparts. Just look at Snyk. The critical Log4j vulnerability, Log4shell, that made nightmares a reality for security buyers was not discovered in 2021, five years after Snyk came to market.

Incumbent risk

As the AI development life cycle begins to more closely resemble that of traditional SWE rather than the more isolated workflow of traditional MLE, it begs the question of whether or not traditional security tools will simply look to integrate solutions that cover AI vulnerabilities under their umbrellas. SCA tools, for example, might very well try to add model scanning into their product suites.

Competition density

Sorry folks, you’re in the land of generative AI now. There will be a new competitor around every corner.

Looking ahead

Yes, the AI security and reliability markets are still in their early days. Standards and best practices for AI development and deployment are ever evolving, and the effectiveness of these security solutions in real-world applications largely remains to be seen. However, as we enter a period of AI moving from research to production throughout the enterprise, one can only assume that bad actors are salivating. While the shape of the most effective solution remains to be seen, getting it right and getting it right soon could be a goldmine.

As always, if you’re a founder building solutions for AI reliability and security, send us a note – maggie@scalevp.com.