LLMs have demonstrated phenomenal capabilities for content generation, which can be leveraged to increase productivity in tasks such as writing or coding. Many of the tools are seeing a huge inflow of individual professional users, some of whom are upgrading to team-level setups that leverage features like content-sharing. But what’s going to bring LLM apps to the next level?

We believe that the first wave of applications to enjoy enterprise adoption will focus on a narrower application of LLMs: enabling interactions through a natural (human) language interface. The prime example here is chatbots.

With that in mind, we figured that adding a chatbot to our own website would be valuable for two reasons. First, it would facilitate access to the long tail of information we present on our website, making it easier for founders to interact with us and understand our views. Second, we believe that forming our own investment thesis requires a deep understanding of the technology–something that can only be acquired by writing code to explore the solutions space.

In this blog post, we share the architecture of our chatbot and open-source the implementation, where most components are built from scratch using standard libraries like NumPy. We also share learnings from the development process, which incorporate iterations based on early user feedback since we added the chatbot to our website over a month ago. Reading about our experience as an example of LLM enterprise adoption might be helpful to founders building products in the space. Our example can also provide a practical framework for other companies who are directly working on a chatbot.

We have released all the source code for the chatbot. It is free to use, modify and distribute. We also included instructions for easy setup and customization. To avoid bloating this article, we’ll limit code snippets to some key illustrative parts of the codebase. As you go through the content, we strongly encourage you to check out the repository, especially if you’re looking to implement a similar setup.

Requirements

Companies looking to launch their own chatbot generally share similar requirements:

- Accuracy: The information presented needs to be correct. In most cases, this also means that the source needs to be quoted, so that the user (or someone supervising what was shown to the user) can know where the answer came from.

- Freshness: Information is constantly changing and needs to be incorporated into the model. While the frequency depends largely on the source of the data and the purpose of the chatbot, it’s generally the case that a stale chatbot does not meet its purpose.

- Speed: Our experience with early user testing was that speed was key to a good user experience. In particular, the critical factor to how fast the chatbot “feels” is the time-to-first-byte, i.e., how long it takes to start seeing words appear. The first versions of the chatbot felt slow because the steps prior to the final call to the LLM (which generates the answer) took too long.

- Operational simplicity: We are a VC firm trying to deliver a chatbot that scales and is highly-available, ideally without having to manage distributed systems or sign up for new vendors. Using a small number of simple dependencies allowed us to dramatically reduce time-to-market.

Architecture

The LLM is not enough

One theoretical architecture for a chatbot would rely exclusively on an LLM that can answer questions based on knowledge embedded in the model. Based on the above requirements, we believe that this is far from being the best solution in practice.

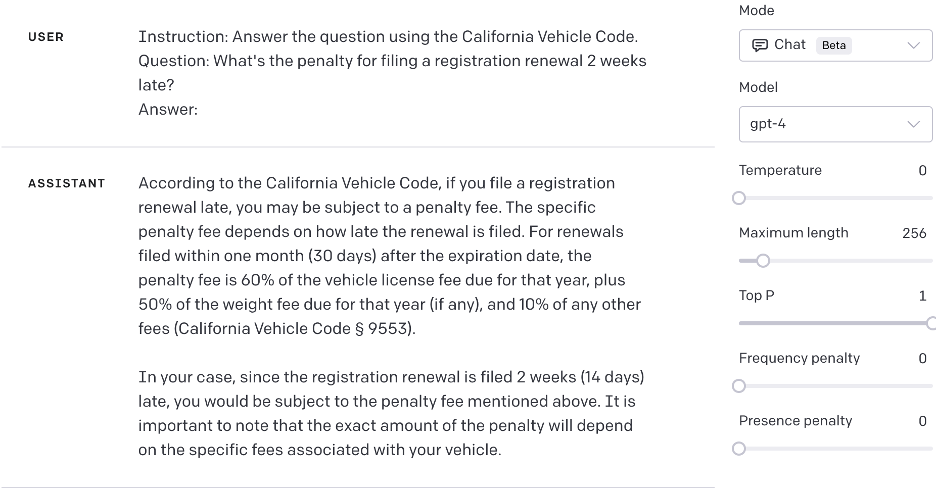

The following example is illustrative of why that might be the case:

The answer here is incorrect according to the California Vehicle Code (the DMV also lays it out more clearly). It also quotes a section that does not contain the answer to the question. This example should come as no surprise given that LLMs are known to hallucinate, but it shows how answers generated from model “knowledge” lead to unpredictable behavior even when using a state-of-the-art model (GPT-4) and minimizing randomness (temperature set to 0).

Fine-tuning doesn’t solve this: the model remains a black box with no guarantees on answer accuracy or traceability. And yet, this does not even begin to cover the set of challenges associated with fine-tuning, which also include producing a working dataset or maintaining knowledge freshness.

Agents to the rescue

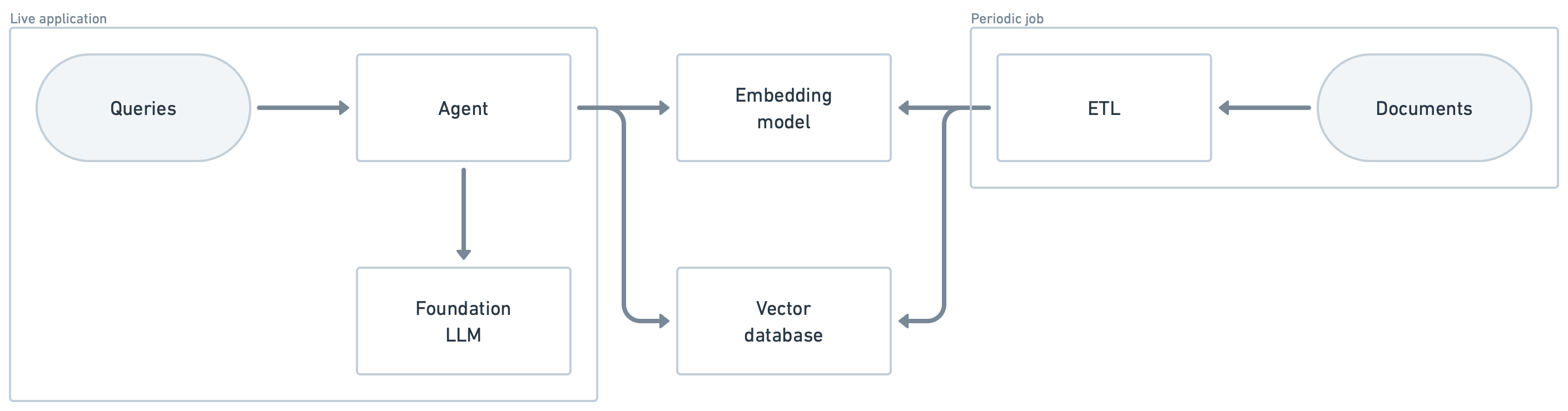

Consider the following architecture instead:

It makes use of the following components:

- An embedding model can be used to create a vector representation (an embedding) of a string of natural language. Conceptually, two strings of natural language are closer in the vector space when they carry similar meaning.

- A vector database enables the storage of embeddings along with metadata (generally the document that produced the embedding). The vector database enables nearest-neighbor search, that is, finding the closest documents to a search query.

- A foundation LLM (and possibly a small one) can be used in this context because the only requirement is the ability to handle language effectively.

- The live application is powered by an agent that executes calls to components like the LLM or the vector database. The agent can either follow a predetermined chain of actions or use the LLM to reason about what to do next.

- A periodic ETL job keeps the chatbot updated by ingesting new documents, applying relevant transformations (e.g., pagination), calculating embeddings and making changes in the vector database.

Implementation

Vector database

We wanted to maximize our understanding of the space as part of the development process, so similar to how every deep learning class begins with a NumPy implementation of AlexNet or Word2vec, we found it useful to start by building our own vector “database” from scratch. We then proceeded with extensive testing of existing implementations.

Ultimately, we came to the conclusion that the solution that best fit our time-to-first-byte and operational simplicity requirements was to keep using our homegrown solution, which consists of a single NumPy array with rows mapping to the various documents. We admit that we’re using the word “database” loosely: our (naive) implementation loads the whole dataset to memory, doesn’t support upserting or deleting (an update rewrites the entire store) and has no indexing capabilities.

It’s worth clarifying that the Scale chatbot answers questions based on information from our website. While we have published dozens of blog posts over the years, given the size of our organization and the standards we have set on public-facing content, the volume of data that our chatbot is dealing with is relatively small. Furthermore, we only expect updates to occur sporadically, incorporating new blog posts and updates about our team and investments. In this context, our solution is ideal because it returns query results quickly and is trivial to operate. We certainly wouldn’t claim that our use case is representative of every chatbot, but we do believe a lot of chatbots will have similar constraints (chatbots for, e.g., marketing, documentation).

You can see our implementation for writes and reads in the repository. As a highlight, we show here the simple operation for retrieval of top k documents:

| distance = lambda a, b: (a @ b.T) / (np.linalg.norm(a) * np.linalg.norm(b)) | |

| norms = distance(index["embeddings"], query_embedding) | |

| top_k = np.argpartition(norms, -k)[-k:] | |

| documents = list(itemgetter(*top_k)(index["documents"])) |

Agent

One of the main conclusions from the early user activity is that conversations have a complex context that is built over a series of questions and answers, which is needed to answer each subsequent question. Fortunately, in most cases, the answers exist directly in our source (the website) and relevant documents can be found with a single vector database query given the right question. As a result, our use case makes it possible to consider a predetermined chain of actions that use the following “tools” in order:

- A contextualization tool that uses an LLM to reformulate the question incorporating prior context (e.g., “who at Scale invests in generative AI” followed by “how do I contact them”). This step is skipped for the first question since there is no prior context.

- A search tool that queries the database with the reformulated question, using the `get_documents` function we defined in the previous section.

- An answering tool that combines the documents and the question to produce an answer. The instructions in the prompt also give the chatbot a personality, instruct it to answer using domain knowledge and the documents (avoiding other topics), and bias towards responding with a call to action (contact us).

We’ll admit to also using the term “agent” loosely. This chain would not work for queries that require iterative thinking (e.g., ReAct) but, similar to our database solution, we think it can serve many use cases that are similar to ours with a simple solution that introduces no additional dependencies other than the API calls to the LLM.

We defined the 3 tools described above as 3 functions that are called by an agent that runs behind a uWSGI server. As a highlight, we show here a simplified version of the agent:

| question = tools.contextualize(prompts["context"], chat) | |

| top_documents = tools.get_documents(question) | |

| answer = tools.answer(prompts["answer"], question, top_documents) | |

| for chunk in answer: | |

| yield chunk |

ETL job

It is clear that the LLMs can generate the right answer given a small prompt that contains the necessary information. This critical point is great news: by reducing the problem to one of retrieval of relevant documents, getting good answers from an LLM has been turned from a black box into a data science task. The direct implication is that the ETL job is where the magic happens. Like for any ML task, extracting the critical data and transforming it in the right way is what will make or break the model (or in this case, the chatbot).

Data scientists will explore the many ways to encode documents. Admittedly, there were many approaches that we may try in later iterations, including support for alternative retrieval mechanisms like document graphs. For now, we were able to achieve a satisfactory performance with a naive implementation that simply paginated our long-form documents (e.g., blog posts) and added context (e.g., title) to each page.

The code is pretty boilerplate, so we refer you to the full source code to see the detailed implementation. The repository’s README also contains instructions for modifying the dataset and a corresponding schema for document generation so that the chatbot is easy to customize.

The future of chatbots

In this blog post, we covered the end-to-end process of building a chatbot entirely from scratch (with the exception of the LLM). We strongly believe chatbots will play a significant role in a variety of use cases across every organization. In such an environment, the conclusions from this blog post reaffirm our conviction on four important bets.

First, the various components of the architecture we discussed here create important opportunities for innovation that improves performance and ease-of-implementation. A number of open-source projects and vendors have emerged in spaces like foundation LLMs, vector databases and agents. We think these solutions are necessary for complex projects that are beyond the scope of our implementation.

Second, architectures like the one presented here will be extended to deliver more functionality. For example, simply adding ASR and TTS enables chatbots to interact with customers over the phone. To give a second example, graph databases will likely also be part of some architectures because they provide support for certain queries that vector databases cannot handle. We think that chatbots are not just about a chat interface on a website or app, and more creative use cases will emerge as organizations seek to add human language interfaces.

Finally, and as it is evident from this content, the number of moving pieces to deliver a working chatbot is significant. We think that this creates a major opportunity for providers who can develop turnkey solutions that can be adopted by stakeholders who work in business functions and cannot afford a major engineering lift.

We’re really excited to see the rapid pace at which these bets are taking off. The focus of this article was to provide a technical deep dive, so we’ll leave the market map for a subsequent blog post. In the meantime, you can reach out to Javier Redondo (javier@scalevp.com) with any questions. If you’re a builder in this space and like our thesis, we’d love to hear from you!